Introduction

In this blog tutorial, we will embark on an exciting journey to integrate two cutting-edge technologies: the Arduino ESP32 and GPT-3.5. The Arduino ESP32 is a versatile development board with built-in Wi-Fi capabilities, while GPT-3.5 is an advanced language model developed by OpenAI. By combining these powerful tools, we can create an interactive chatbot capable of engaging in natural language conversations with users in real time.

The integration works by connecting the ESP32 to the Chat Completions API over HTTPS. We'll guide you through each step, from setting up the hardware to deploying the code, and finally we'll explore what this project makes possible. So, let's dive in and build our own AI-powered chatbot!

Pre-requisites

- Basic knowledge of Arduino and ESP32.

- OpenAI API Key. Visit the OpenAI website to register and get your API Key.

- PlatformIO IDE (Download From: https://platformio.org/install/ide?install=vscode)

What is the GPT-3 Model?

GPT-3, short for "Generative Pre-trained Transformer 3," is a state-of-the-art language model developed by OpenAI. It is designed to generate human-like text and is capable of performing a wide range of natural language processing tasks, from language translation to content creation.

The Chat Completions API

In our ESP32-ChatGPT integration, we specifically use the Chat Completions API, which is designed for interactive conversations: the user sends a series of messages, and the model responds accordingly.

Understanding the Chat Completion Creation API Request

The following example demonstrates how to create a chat completion using the OpenAI API. The request uses the gpt-3.5-turbo model to generate a response for a chat conversation between a user and an assistant.

curl https://api.openai.com/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello!"
      },
      {
        "role": "assistant",
        "content": "Hello! How can I assist you today?"
      }
    ]
  }'

Request Components:

- API Endpoint: The API endpoint used for chat completions is https://api.openai.com/v1/chat/completions. It's through this endpoint that you interact with the OpenAI API to generate responses.

- Headers:

  - "Content-Type: application/json": This header specifies that the content being sent in the request body is in JSON format.

  - "Authorization: Bearer $OPENAI_API_KEY": This header includes your API key for authentication. Replace $OPENAI_API_KEY with your actual OpenAI API key.

- Request Body (Data): The request body is provided in JSON format and contains the necessary information for the chat completion.

  - "model": "gpt-3.5-turbo": This indicates the model you want to use for the chat completion. "gpt-3.5-turbo" is a chat-optimized model from the GPT-3.5 family.

  - "messages": This array contains the chat conversation, oldest message first. Each message object includes a "role" and "content".

Roles in GPT-3 Conversations: User, Assistant, and System

In a chat completion request, every message carries one of three roles (user, assistant, or system). Together they guide the interaction and shape the chatbot's responses.

- User Role: The "user" role represents the person engaging with the chatbot. User messages initiate the conversation and set the context. GPT-3 generates responses based on user input, simulating human-like communication.

- Assistant Role: The "assistant" role embodies the chatbot's voice. Assistant messages include responses generated by GPT-3, driven by the conversation's history. The assistant role maintains context, ensuring coherent and relevant exchanges.

- System Role: The "system" role provides instructions to guide the assistant's behavior. Unlike the other roles, system messages are not a turn in the conversation itself. Instead, they set high-level context or directives (for example, a persona or a tone) that shape how the assistant replies.

So, in simple words, users start the chat, the assistant replies, and sometimes you can guide the assistant's behavior with special instructions. This mix of roles makes conversations with the chatbot feel more natural and helpful.
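To make the roles concrete, here is a minimal sketch of how a messages array could be assembled into a request body. It is written in plain C++ with std::string so it can be tried on a desktop compiler; on the ESP32 you would more likely use Arduino's String or ArduinoJson. The names ChatMessage, escapeJson, and buildChatPayload are our own illustrative helpers, not part of any library.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>
#include <vector>

// One chat message: role is "system", "user", or "assistant".
struct ChatMessage {
  std::string role;
  std::string content;
};

// Escape text so it can be placed inside a JSON string literal.
std::string escapeJson(const std::string &text) {
  std::string out;
  for (unsigned char c : text) {
    switch (c) {
      case '"':  out += "\\\""; break;
      case '\\': out += "\\\\"; break;
      case '\n': out += "\\n";  break;
      case '\r': out += "\\r";  break;
      case '\t': out += "\\t";  break;
      default:
        if (c < 0x20) {
          // Remaining control characters become \u00XX escapes.
          char buf[7];
          std::snprintf(buf, sizeof(buf), "\\u%04x", static_cast<unsigned>(c));
          out += buf;
        } else {
          out += static_cast<char>(c);
        }
    }
  }
  return out;
}

// Serialize the model name and the message list into a request body.
std::string buildChatPayload(const std::string &model,
                             const std::vector<ChatMessage> &messages) {
  std::string body = "{\"model\":\"" + escapeJson(model) + "\",\"messages\":[";
  for (std::size_t i = 0; i < messages.size(); ++i) {
    if (i > 0) body += ",";
    body += "{\"role\":\"" + escapeJson(messages[i].role) +
            "\",\"content\":\"" + escapeJson(messages[i].content) + "\"}";
  }
  body += "]}";
  return body;
}
```

Passing a system message followed by a user message yields a body with the same shape as the curl example. Escaping matters because a prompt containing a double quote or a newline would otherwise produce invalid JSON.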

Understanding the Chat Completion Creation API Response

Once you've made a request to the OpenAI API for chat completions, you'll receive a response containing valuable information about the generated completion. Let's break down the components of a typical API response and understand what each element signifies.

Here's an example response:

{
  "id": "chatcmpl-123",
  "object": "chat.completion",
  "created": 1677652288,
  "model": "gpt-3.5-turbo-0613",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "\n\nHello there, how may I assist you today?"
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 9,
    "completion_tokens": 12,
    "total_tokens": 21
  }
}

Response Components:

- "id": This unique identifier ("chatcmpl-123" in the example) helps track and manage the specific chat completion you've requested.

- "object": Specifies the type of object in the response, in this case "chat.completion".

- "created": Timestamp indicating when the chat completion was created (Unix time, in seconds).

- "model": The specific model that was used for generating the completion ("gpt-3.5-turbo-0613" in the example).

- "choices": An array containing the generated completions. In this case, there's one completion.

  - "index": The index of the completion (0 when there's only one completion).

  - "message": The generated message, which includes the role and the content.

    - "role": The role of the message ("assistant" in this case).

    - "content": The actual content of the message generated by the assistant.

  - "finish_reason": Specifies why the model stopped generating. "stop" means the reply ended naturally; other values, such as "length", mean it was cut short, for example by the token limit.

- "usage": Provides information about token usage.

  - "prompt_tokens": The number of tokens used in the prompt (input messages).

  - "completion_tokens": The number of tokens used in the completion (output message).

  - "total_tokens": The total number of tokens used (prompt + completion).
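Once the fields are parsed, a couple of small checks can make the response easier to act on. The sketch below, in plain C++, maps the "finish_reason" string to an enum and verifies that the "usage" counts add up. FinishReason, parseFinishReason, isTruncated, Usage, and usageIsConsistent are hypothetical names of ours, and the list of reason strings reflects our understanding of the API documentation rather than a guaranteed complete set.

```cpp
#include <string>

// Reasons a completion can end; Unknown covers any value not listed here.
enum class FinishReason { Stop, Length, ContentFilter, ToolCalls, Unknown };

// Map the "finish_reason" string from the response to an enum value.
FinishReason parseFinishReason(const std::string &value) {
  if (value == "stop") return FinishReason::Stop;
  if (value == "length") return FinishReason::Length;
  if (value == "content_filter") return FinishReason::ContentFilter;
  if (value == "tool_calls" || value == "function_call") return FinishReason::ToolCalls;
  return FinishReason::Unknown;
}

// True when the reply hit the token limit and is probably incomplete.
bool isTruncated(FinishReason reason) {
  return reason == FinishReason::Length;
}

// Token counts copied from the "usage" object.
struct Usage {
  int promptTokens;
  int completionTokens;
  int totalTokens;
};

// The total should equal prompt + completion, e.g. 9 + 12 = 21 in the example.
bool usageIsConsistent(const Usage &usage) {
  return usage.promptTokens + usage.completionTokens == usage.totalTokens;
}
```

On a memory-constrained board, checking isTruncated() before printing a reply is a cheap way to warn the user that the answer was cut off.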

Integrating GPT-3.5 with ESP32

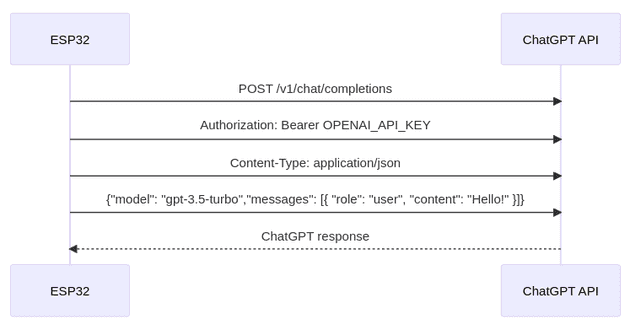

Now, let's explore the process of connecting the ESP32 with ChatGPT using HTTP. This involves sending a user's message to the Chat Completions API and receiving the response to provide a conversational experience.

Setting up the Hardware

Ensure that you have an ESP32 development board and establish a connection between the board and your computer using a USB cable. Install the necessary drivers to program the ESP32.

Install the Required Libraries

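To streamline development, we rely on three libraries: WiFi, WiFiClientSecure, and ArduinoJson. WiFi and WiFiClientSecure ship with the ESP32 Arduino core, so only ArduinoJson has to be installed separately (the code in this tutorial is written against the ArduinoJson 6 API). Together they enable the ESP32 to communicate with the Chat Completions API.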

Create an OpenAI Account and Obtain API Key

To access GPT-3.5 through the Chat Completions API, you must sign up for an API key on the OpenAI platform. This key authenticates every request your chatbot sends, so keep it private and never commit it to a public repository.

The Arduino Code

In our ESP32-GPT-3.5 integration code, we make use of the Chat Completions API by sending HTTP POST requests from the ESP32 to the API's endpoint. The payload of these requests includes the user's message or prompt.

Here's a breakdown of each step:

- The ESP32 initiates an HTTP POST request to the Chat Completions API endpoint.

- The ESP32 includes the API key in the request header for authentication.

- The request header also contains the "Content-Type" as "application/json" to indicate that the payload is in JSON format.

- The payload sent to the Chat Completions API contains the model and the user's messages array.

- The Chat Completions API processes the request, generating a response.

- The Chat Completions API sends back the chatbot's response to the ESP32.
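The first four steps above amount to assembling one block of text. As a rough sketch, this is what that assembly could look like in plain C++ (std::string instead of Arduino's String, so it compiles on a desktop too). buildHttpRequest is an illustrative helper name of ours, and the API key in the usage note is a placeholder.

```cpp
#include <string>

// Assemble a raw HTTP/1.1 POST request for the Chat Completions endpoint.
std::string buildHttpRequest(const std::string &host,
                             const std::string &apiKey,
                             const std::string &payload) {
  std::string request = "POST /v1/chat/completions HTTP/1.1\r\n";
  request += "Host: " + host + "\r\n";
  request += "Authorization: Bearer " + apiKey + "\r\n";
  request += "Content-Type: application/json\r\n";
  // Content-Length is the body size in bytes, not in characters.
  request += "Content-Length: " + std::to_string(payload.size()) + "\r\n";
  request += "Connection: close\r\n";
  request += "\r\n";  // Blank line separates headers from the body.
  request += payload;
  return request;
}
```

For example, buildHttpRequest("api.openai.com", "sk-test", "{\"a\":1}") produces a request whose headers include "Content-Length: 7". Sending "Connection: close" lets the client read until the server closes the socket instead of tracking the response length itself.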

Code Implementation

First, include the required libraries. The WiFi.h library handles Wi-Fi connections, ArduinoJson.h simplifies working with JSON data, and WiFiClientSecure.h enables secure HTTPS communication:

#include <WiFi.h>
#include <ArduinoJson.h>
#include <WiFiClientSecure.h>

Now, in the credentials.h file, define your Wi-Fi credentials (ssid and password) to connect your ESP32 to your Wi-Fi network, and provide your OpenAI API key (api_key) for authentication.

#ifndef CREDENTIALS_H
#define CREDENTIALS_H

const char* ssid = "";      // Wi-Fi network name
const char* password = "";  // Wi-Fi password
const char* api_key = "";   // OpenAI API key
const char* host = "api.openai.com";
const int httpsPort = 443;

#endif // CREDENTIALS_H

Next, the connectToWiFi() function connects the ESP32 to the Wi-Fi network. The function bool sendHTTPRequest(String prompt, String *result) makes the HTTP request to the Chat Completions API: we pass in the user prompt and receive the raw response through result.

Finally, we have a wrapper function, String getGptResponse(String prompt, bool parseMsg). Behind the scenes, it runs sendHTTPRequest(), grabs the result, and parses the message using the ArduinoJson library.

In the setup() function, the ESP32 initializes serial communication and connects to Wi-Fi.

This code effectively demonstrates the process of sending a user message to the ChatGPT API and receiving the chatbot's response using the ESP32.

void setup() {
  Serial.begin(115200);
  connectToWiFi();
}

In the loop() function, we check whether a new prompt has arrived from the Serial Monitor, then trim it and call getGptResponse() with the user prompt.

void loop()
{
  if (Serial.available() > 0)
  {
    String prompt = Serial.readStringUntil('\n');
    prompt.trim();
    Serial.print("ESP32 > ");
    Serial.println(prompt);
    String response = getGptResponse(prompt);
    Serial.println("ChatGPT > " + response);
    delay(3000);
  }
  delay(10);
}

The full code is provided below:

#include <WiFi.h>
#include <WiFiClientSecure.h>
#include <ArduinoJson.h>
#include "credentials.h"

WiFiClientSecure httpClient;

/* Function to connect to WiFi */
void connectToWiFi();

/* Function to make the HTTP request */
bool sendHTTPRequest(String prompt, String *result);

/* Function to pass the user prompt to the OpenAI API */
String getGptResponse(String prompt, bool parseMsg = true);

void setup()
{
  Serial.begin(115200);
  connectToWiFi();
}

void loop()
{
  if (Serial.available() > 0)
  {
    // Read one line from the Serial Monitor and use it as the prompt
    String prompt = Serial.readStringUntil('\n');
    prompt.trim();
    Serial.print("ESP32 > ");
    Serial.println(prompt);
    String response = getGptResponse(prompt);
    Serial.println("ChatGPT > " + response);
    delay(3000);
  }
  delay(10);
}

void connectToWiFi()
{
  WiFi.begin(ssid, password);
  // Block until the connection is established
  while (WiFi.status() != WL_CONNECTED)
  {
    delay(1000);
    Serial.println("Connecting to WiFi...");
  }
  Serial.println("WiFi connection successful");
}

bool sendHTTPRequest(String prompt, String *result)
{
  if (WiFi.status() != WL_CONNECTED)
  {
    Serial.println("ERROR: Device not connected to WiFi");
    return false;
  }

  // Connect to the OpenAI API host.
  // setInsecure() skips TLS certificate validation: fine for a demo, but
  // prefer setCACert() with a trusted root certificate in production.
  httpClient.setInsecure();
  if (!httpClient.connect(host, httpsPort))
  {
    Serial.println("Error connecting to the OpenAI API");
    return false;
  }

  // Build Payload with ArduinoJson so that quotes, backslashes, and control
  // characters in the prompt are escaped correctly
  DynamicJsonDocument body(prompt.length() + 256);
  body["model"] = "gpt-3.5-turbo";
  JsonObject message = body.createNestedArray("messages").createNestedObject();
  message["role"] = "user";
  message["content"] = prompt;
  String payload;
  serializeJson(body, payload);
  Serial.println(payload);

  // Build HTTP Request
  String request = "POST /v1/chat/completions HTTP/1.1\r\n";
  request += "Host: " + String(host) + "\r\n";
  request += "Authorization: Bearer " + String(api_key) + "\r\n";
  request += "Content-Type: application/json\r\n";
  request += "Content-Length: " + String(payload.length()) + "\r\n";
  request += "Connection: close\r\n";
  request += "\r\n";
  request += payload;

  // Send HTTP Request
  httpClient.print(request);

  // Get Response: keep reading while the socket is open or data is buffered
  String response = "";
  while (httpClient.connected() || httpClient.available())
  {
    if (httpClient.available())
    {
      // readStringUntil() drops the '\n' but keeps the '\r', so add '\n' back
      response += httpClient.readStringUntil('\n');
      response += '\n';
    }
  }
  httpClient.stop();

  // Parse HTTP Response Code: the three digits after the first space
  // of the status line, e.g. "HTTP/1.1 200 OK"
  int responseCode = 0;
  int firstSpace = response.indexOf(" ");
  if (firstSpace != -1)
  {
    responseCode = response.substring(firstSpace + 1, firstSpace + 4).toInt();
  }
  if (responseCode != 200)
  {
    Serial.println("Request failed. Info: " + String(response));
    return false;
  }

  // Get JSON Body: everything from the first '{' to the last '}'
  int start = response.indexOf("{");
  int end = response.lastIndexOf("}");
  if (start >= 0 && end > start)
  {
    *result = response.substring(start, end + 1);
    return true;
  }
  Serial.println("Error: could not read the response body");
  return false;
}

String getGptResponse(String prompt, bool parseMsg)
{
  String resultStr;
  bool result = sendHTTPRequest(prompt, &resultStr);
  if (!result) return "Error : sendHTTPRequest";
  if (!parseMsg) return resultStr;

  // Parse the JSON body and extract the assistant's reply
  DynamicJsonDocument doc(resultStr.length() + 200);
  DeserializationError error = deserializeJson(doc, resultStr.c_str());
  if (error)
  {
    return "[ERR] deserializeJson() failed: " + String(error.f_str());
  }
  // Fall back to an empty string if the field is missing
  const char *_content = doc["choices"][0]["message"]["content"] | "";
  return String(_content);
}

You can find the project repository here. The code is available for PlatformIO IDE and Arduino IDE.
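The response handling inside sendHTTPRequest() boils down to two small steps: reading the status code from the first line and separating the headers from the body. Here is a standalone sketch of both in plain C++, which is handy for testing the logic away from the board. parseStatusCode and extractBody are hypothetical helper names of ours and do not appear in the project code.

```cpp
#include <cstddef>
#include <string>

// Return the numeric status from a status line such as "HTTP/1.1 200 OK",
// or 0 if the response does not start with a well-formed status line.
int parseStatusCode(const std::string &response) {
  std::size_t space = response.find(' ');
  if (space == std::string::npos || space + 4 > response.size()) return 0;
  int code = 0;
  for (std::size_t i = space + 1; i < space + 4; ++i) {
    char c = response[i];
    if (c < '0' || c > '9') return 0;
    code = code * 10 + (c - '0');
  }
  return code;
}

// Return everything after the blank line that ends the headers,
// or an empty string if no header terminator is found.
std::string extractBody(const std::string &response) {
  std::size_t split = response.find("\r\n\r\n");
  if (split == std::string::npos) return "";
  return response.substr(split + 4);
}
```

Note that this sketch does not decode chunked transfer encoding. If the server replies with "Transfer-Encoding: chunked", the extracted body still contains chunk-size lines, which is one reason the tutorial code searches for the first "{" and the last "}" instead.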

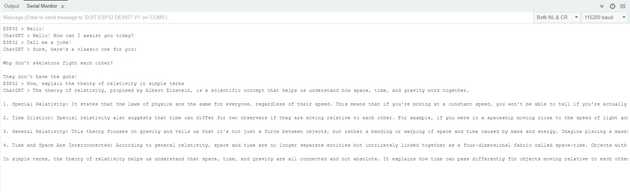

Testing the ESP32-GPT-3.5 Firmware

Now that we have implemented the ESP32-GPT-3.5 integration, it's time to test the firmware and witness the magic of AI-powered conversations. This playground section will guide you through the process of uploading the firmware to your ESP32 and observing the responses in the Serial Monitor.

Monitoring the Serial Output

- Connect your ESP32 to your computer via USB.

- Open the Serial Monitor in Arduino IDE (Tools > Serial Monitor) or PlatformIO IDE.

- Set the baud rate to 115200.

- You will see messages indicating Wi-Fi connection and interaction with the ChatGPT API.

Observing the ChatGPT Magic

As you watch the Serial Monitor, you'll see the ESP32 chatting with the GPT-3.5 model: it sends each prompt you type to ChatGPT and then displays the assistant's response.

Conclusion

Congratulations on successfully integrating the Arduino ESP32 with GPT-3.5 to build an interactive chatbot! We've explored the hardware setup, library installation, and the essential steps for the ESP32-GPT-3.5 integration, using the Chat Completions API over HTTPS. This combination of hardware and AI opens up exciting possibilities for creating innovative applications.

You can further enhance your chatbot by exploring more advanced features of the GPT-3.5 model, such as system messages and multi-turn conversation history, and by integrating sensors to make the interaction even more dynamic.

Remember, this project is just the beginning. As technology continues to evolve, the fusion of hardware and AI will undoubtedly lead to groundbreaking innovations. Happy tinkering and building!